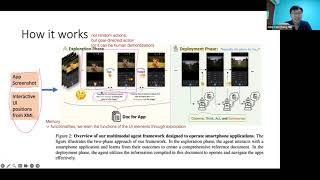

AppAgent: Using GPT-4V to Navigate a Smartphone!

Comments:

Self-evaluation after each step is mentioned in passing, but one point deserves more weight: once the AI has named the UID number in its output, it should be given a new screenshot before the next command (e.g., click) is executed, so that it can check that the new position is correct before continuing, just as a human checks that their finger or mouse is in the right place before tapping or clicking. In other words, a command like "click the button at coordinates X, Y" should really be decomposed into two actions: 1) "move to X, Y" [confirm the position is correct], and only then 2) [click]. Maybe this could be spelled out in the system instructions given to the AI.
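The move, confirm, then click idea above could be sketched roughly as follows. This is only an illustration of the comment's suggestion, not how AppAgent works: `Target`, `confirmed_click`, and the four callables (`move_to`, `take_screenshot`, `confirm`, `click`) are hypothetical names, and `confirm` stands in for a call that shows the fresh screenshot to the vision model and asks whether the pointer is on the intended element.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Target:
    """Element the model chose: its UID label and screen coordinates."""
    uid: int
    x: int
    y: int


def confirmed_click(
    target: Target,
    move_to: Callable[[int, int], None],
    take_screenshot: Callable[[], Any],
    confirm: Callable[[Any, Target], bool],
    click: Callable[[int, int], None],
    max_retries: int = 2,
) -> bool:
    """Click only after a fresh screenshot confirms the position.

    All four callables are assumptions supplied by the caller; in a real
    agent, `confirm` would query the vision model with the new screenshot.
    """
    for _ in range(max_retries + 1):
        move_to(target.x, target.y)        # action 1: move, do not click yet
        screenshot = take_screenshot()     # new screenshot after the move
        if confirm(screenshot, target):    # model checks the position
            click(target.x, target.y)      # action 2: click only when confirmed
            return True
    return False                           # never confirmed, so never clicked
```

Returning `False` instead of clicking anyway leaves it to the caller to decide whether to re-plan or ask the model for a different UID.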


It is important that producing the documentation doesn't mean losing all the information that helped create it. That information (the steps taken and the self-evaluation of success at each step) should be kept in a database, which can later be used to fine-tune the AI model (e.g., GPT-4V, once fine-tuning is available, if it isn't already).
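One way to keep that per-step information could look like the sketch below, which uses Python's built-in `sqlite3` module. The table layout and the function names (`open_log`, `record_step`, `export_successful`) are assumptions made for illustration; nothing here reflects how AppAgent actually stores its exploration data.

```python
import json
import sqlite3


def open_log(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a step log; the schema is a hypothetical example."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS steps (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               task TEXT NOT NULL,
               step INTEGER NOT NULL,
               action TEXT NOT NULL,      -- JSON-encoded action
               self_eval TEXT NOT NULL,   -- the model's own assessment
               success INTEGER NOT NULL   -- 1 if the step was judged successful
           )"""
    )
    return conn


def record_step(conn, task, step, action, self_eval, success):
    """Store one step together with its self-evaluation."""
    conn.execute(
        "INSERT INTO steps (task, step, action, self_eval, success) "
        "VALUES (?, ?, ?, ?, ?)",
        (task, step, json.dumps(action), self_eval, int(success)),
    )
    conn.commit()


def export_successful(conn):
    """Return successful steps in order, e.g. as raw material for fine-tuning."""
    rows = conn.execute(
        "SELECT task, step, action, self_eval FROM steps "
        "WHERE success = 1 ORDER BY task, step"
    ).fetchall()
    return [
        {"task": t, "step": s, "action": json.loads(a), "self_eval": e}
        for t, s, a, e in rows
    ]
```

Failed steps are kept in the table rather than discarded, since they may also be useful later (for example as negative examples); only the export filters on `success`.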


How can I join your classes? Is there a better UI grounding model than SeeClick?
