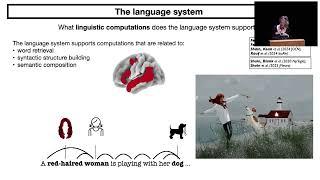

Evelina Fedorenko - Neural Net language models as models of language processing in the human brain

I seek to understand how our brains understand and produce language. Patient investigations and neuroimaging studies have delineated a network of left frontal and temporal brain areas that support language processing, and work in my group has established that this “language network” is robustly dissociated both from lower-level speech perception and articulation mechanisms and from systems of knowledge and reasoning (Fedorenko et al., 2024a Nat Rev Neurosci; Fedorenko et al., 2024b Nature). The areas of the language network appear to support computations related to lexical access, syntactic structure building, and semantic composition, and the processing of individual word meanings and combinatorial linguistic processing are not spatially segregated: every language area is sensitive to both (e.g., Shain, Kean et al., 2024 JOCN). In spite of substantial progress in our understanding of the human language system, the precise computations that underlie our ability to extract meaning from word sequences have remained out of reach, in large part because of the limitations of human neuroscience approaches. But a real revolution happened a few years ago, when a candidate model organism for the study of language emerged, albeit not a biological one: neural network language models (LMs), such as GPT-2 and its successors. These models exhibited human-level performance on diverse language tasks, including tasks long argued to be solvable only by humans, and often produced human-like output. Inspired by the LMs’ linguistic prowess, we tested whether the internal representations of these models resemble the representations in the human brain when both process the same linguistic inputs, and found that LM representations do indeed predict neural responses in the human language areas (Schrimpf et al., 2021 PNAS).
This model-to-brain representational similarity opens many exciting avenues for investigating human language processing mechanisms (for a review, see Tuckute et al., 2024 Ann Rev Neurosci). I will discuss several lines of recent and ongoing work, including a demonstration that LMs align with brains even after a relatively small amount of training (Hosseini et al., 2024 Neurobio of Lang), a closed-loop neuromodulation approach to identify the linguistic features that most strongly drive the language system (Tuckute et al., 2024 Nat Hum Beh), and work on the universality of representations across LMs and between LMs and brains (Hosseini et al., in prep.).
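The claim that LM representations "predict neural responses" is commonly operationalized as a cross-validated linear encoding model: fit a linear map from model features to neural responses on some stimuli, then measure how well it predicts responses to held-out stimuli. The sketch below illustrates that general idea under stated assumptions and is not the pipeline used in Schrimpf et al. (2021). It assumes one feature vector per sentence (for example, one layer's activations) and one response per neural site (a voxel or electrode), uses closed-form ridge regression as one common choice of linear map, and scores held-out Pearson correlation. The function names (`ridge_fit`, `pearson_per_column`, `encoding_score`), the fixed `alpha=1.0`, and the synthetic data are all hypothetical.

```python
import numpy as np


def ridge_fit(X, Y, alpha):
    """Closed-form ridge regression weights: W = (X^T X + alpha * I)^(-1) X^T Y."""
    n_features = X.shape[1]
    A = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(A, X.T @ Y)


def pearson_per_column(Y_true, Y_pred):
    """Pearson r between observed and predicted responses, one value per neural site."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    num = (yt * yp).sum(axis=0)
    den = np.sqrt((yt ** 2).sum(axis=0) * (yp ** 2).sum(axis=0))
    return num / den


def encoding_score(X, Y, alpha=1.0, n_folds=5, seed=0):
    """Cross-validated predictivity of neural responses Y (stimuli x sites)
    from model features X (stimuli x features), averaged over folds and sites."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(X.shape[0]), n_folds)
    fold_scores = []
    for k, test in enumerate(folds):
        train = np.concatenate([f for j, f in enumerate(folds) if j != k])
        # Standardize features with training-set statistics only (no test leakage).
        mu, sd = X[train].mean(axis=0), X[train].std(axis=0) + 1e-8
        X_train, X_test = (X[train] - mu) / sd, (X[test] - mu) / sd
        y_mean = Y[train].mean(axis=0)
        W = ridge_fit(X_train, Y[train] - y_mean, alpha)
        Y_pred = X_test @ W + y_mean
        fold_scores.append(pearson_per_column(Y[test], Y_pred))
    return float(np.mean(fold_scores))


# Synthetic demo (hypothetical data): random vectors stand in for LM sentence embeddings.
rng = np.random.default_rng(1)
n_sentences, n_features, n_sites = 200, 50, 10
X = rng.standard_normal((n_sentences, n_features))
W_true = rng.standard_normal((n_features, n_sites))
# "Responses" that are a noisy linear function of the features.
Y_related = X @ W_true + 0.5 * rng.standard_normal((n_sentences, n_sites))
# "Responses" that carry no information about the features.
Y_unrelated = rng.standard_normal((n_sentences, n_sites))

signal_score = encoding_score(X, Y_related)
null_score = encoding_score(X, Y_unrelated)
print(f"related responses:   r = {signal_score:.3f}")
print(f"unrelated responses: r = {null_score:.3f}")
```

With these synthetic settings the related responses should score close to 1 and the unrelated ones close to 0. Ridge is used rather than ordinary least squares because LM feature spaces are often high-dimensional relative to the number of stimuli; a real analysis would typically tune `alpha` by nested cross-validation and, since neural measurements are noisy, may interpret scores relative to an estimated noise ceiling.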

Conference on Language Modeling